The current wave of deadly civil unrest sparked by police and white supremacist killings of unarmed black people, including Ahmaud Arbery, George Floyd and Breonna Taylor, has brought increased scrutiny of tech companies’ business dealings with law enforcement agencies. This, in turn, has prompted some leading tech firms to reexamine their relationships with police and other government agencies. Within 24 hours of each other, Amazon and Microsoft this week announced they were placing moratoriums on the sale of facial recognition technology to US law enforcement agencies, citing the need for stronger government regulation of its use.

In a company blog post on Wednesday, Amazon said it would place a one-year moratorium on police use of its Rekognition technology, with exceptions for efforts to combat human trafficking and to reunite missing children with their families. “We’ve advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology, and in recent days, Congress appears ready to take on this challenge,” the post said. “We hope this one-year moratorium might give Congress enough time to implement appropriate rules, and we stand ready to help if requested.”

On Thursday, Microsoft announced that it would not sell any facial recognition technology to police departments until federal regulation is enacted. “We do not sell… to US police departments today, and until there is a strong national law grounded in human rights, we will not sell this technology to police,” the company said.

The moves by the two tech giants come days after IBM announced that it was getting out of the facial recognition business altogether. The company said it would no longer offer or develop general-purpose facial recognition or analysis software. In a letter to Congress, IBM CEO Arvind Krishna said that the company “firmly opposes and will not condone uses of any [facial recognition] technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency.”

“We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies,” Krishna added.

Some critics accused Microsoft of hypocrisy, noting that the company has been criticized for betraying its own artificial intelligence (AI) principles by investing in AnyVision, an Israeli company that produces facial recognition technology used to monitor and control Palestinians living in the illegally occupied West Bank.

Amazon’s move is more substantive than symbolic, as the company sells Rekognition to law enforcement agencies across the country. Rekognition, which Amazon says “provides highly accurate facial analysis and facial search capabilities that you can use to detect, analyze, and compare faces for a wide variety of user verification, people counting, and public safety use cases,” has reportedly been pitched to agencies including Immigration and Customs Enforcement (ICE). Human rights activists were outraged that Amazon would even consider selling this technology to an agency which is operating concentration camps for undocumented immigrants.

Rekognition was created by the company’s Amazon Web Services (AWS) division, whose CEO, Andy Jassy, openly admits that he does not know how many agencies are currently using it. “I don’t think we know the total number of police departments that are using [Amazon’s] facial recognition technology,” Jassy said in a recently aired episode of PBS Frontline, “Amazon Empire: The Rise and Reign of Jeff Bezos.”

“We have 165 services in our technology infrastructure platform, and you can use them in any combination you want,” he added. Jassy’s admission strikes some observers as curious given Amazon’s culture of “customer obsession” and its practice of tracking user behavior in minute detail.

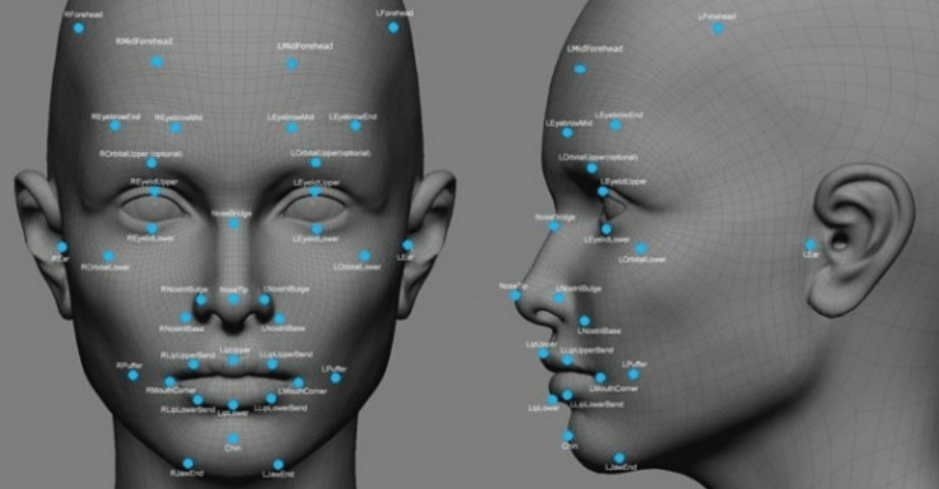

Government and law enforcement use of facial recognition has drawn opposition from human rights activists and digital privacy advocates because of its potential for abuse and its alarming rate of misidentifying people of color, especially women of color. Critics say this all but guarantees that members of vulnerable, over-policed groups will be falsely prosecuted for crimes they did not commit.

There are currently no transparent rules regulating the use and sharing of facial recognition data. Congress, however, has been working on bipartisan legislation to regulate facial recognition use by government, law enforcement and the private sector. The House Oversight and Reform Committee has held several hearings on the issue over the past year.

In the meantime, state and local governments have stepped up to address the issue. Last October, California became the third state, after Oregon and New Hampshire, to ban facial recognition software in police body cameras. This followed moves by cities including San Francisco and Oakland to bar police and other government agencies from using the technology.

“The movement to ban face recognition is gaining momentum,” writes Matthew Guariglia of the Electronic Frontier Foundation (EFF), a San Francisco-based digital advocacy group. “The historic demonstrations of the past two weeks show that the public will not sit idly by while tech companies enable and profit off of a system of surveillance and policing that hurts so many.”

Ethics In Tech partner Electronic Frontier Alliance (EFA), a project of EFF, will be hosting a panel discussion on facial recognition in Portland, Oregon on June 15th at 6:00 pm. Scheduled panelists include Portland City Commissioner Jo Ann Hardesty, Sarah Hamid of the Council on American-Islamic Relations (CAIR) Oregon, Clare Garvie of the Center on Privacy and Technology at Georgetown University Law Center and EFA lead coordinator Nathan “Nash” Sheard.